Getting Django on Heroku prancing 8 times faster.

Posted by tkopczuk on July 18, 2011

We’ve seen how to deploy Django on Heroku’s new Cedar stack. Now let’s make it better, blazingly fast, and all on a single – free – instance.

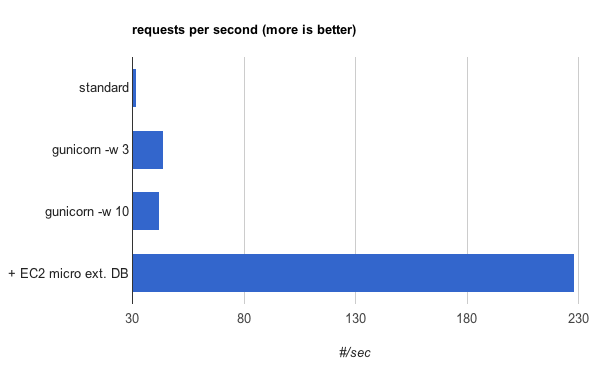

TL;DR – you’ll achieve this if you read on

Summary for starters – running Django and gunicorn with multiple workers on a single free Heroku node is possible. Combine that with an external PostgreSQL instance and connection pooling, and you get a massive 8x speedup. And it can actually be free.

Application performance on a single Heroku node

Using your own database instead of Heroku’s dedicated DBs will save you huge amounts of money (see Stage 3 below).

Making a Heroku-ready Django application

Get the heroku gem:

gem install heroku

Now you have to create a new project or migrate your old one.

Project layout is important.

- Main repository folder
    - Django Project
        - <…>
        - settings.py
    - run_heroku_run.sh
    - requirements.txt
    - Procfile

We’ll use our old sample project called The Tuitter, which is available on GitHub.

Just do:

git clone https://github.com/tkopczuk/ATP_Performance_Test

I will assume you are using this sample application. If you want to create a new project from scratch, here’s a shell script to do that for you: . But remember to change paths accordingly.

Now cd to your SITE_ROOT, create your new Heroku instance and deploy!

heroku create --stack cedar
git push heroku master

Create all the database tables:

heroku run bin/python ATP_Performance_Test/manage.py syncdb

Ready!

Case study

Stage 1

Demo is available here: http://quiet-mountain-395.herokuapp.com/jinja2/

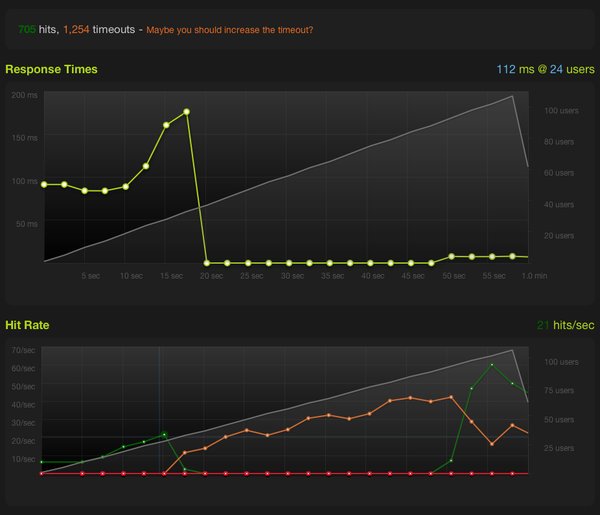

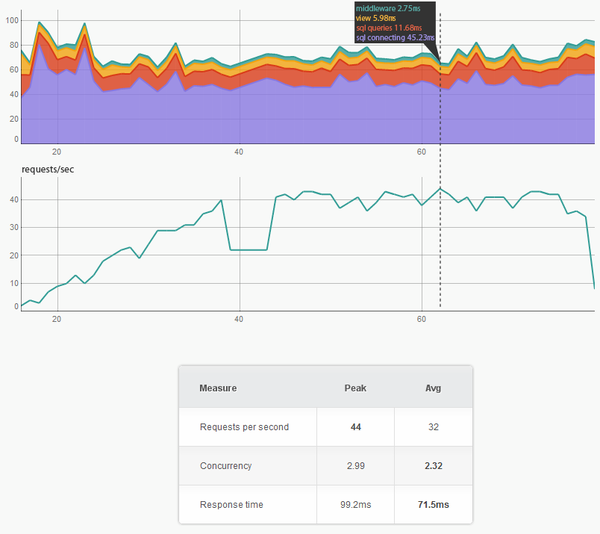

Tests are done with the app warmed up, and the DB filled with test data. So what are we starting with? According to blitz:

Stage 1 – python manage.py runserver

That’s bad, but what did we expect from the development server? What are we bound by?

Stage 1 – performance analysis

So the bottleneck is actually connecting to PostgreSQL. We only have one process on a free node, so we can either try connection pooling and get it down to ~10 ms on one process or – well – make it concurrent somehow and test Heroku’s limits. We’ll go for the limits first and then pool.
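To make the pooling idea concrete, here is a minimal in-process sketch. It is an illustration only: pgBouncer does this job outside the application, and `TinyPool`, `fake_connect` and the connection cost are hypothetical names and numbers, not part of the sample project.

```python
import queue
import time


def fake_connect(cost_s=0.001):
    """Hypothetical stand-in for opening a PostgreSQL connection."""
    time.sleep(cost_s)  # simulate the connection handshake
    return object()


class TinyPool:
    """Opens a fixed number of connections once and hands them out for reuse."""

    def __init__(self, connect, size):
        self._idle = queue.Queue()
        for _ in range(size):
            self._idle.put(connect())  # pay the connection cost up front

    def acquire(self):
        return self._idle.get()  # blocks until a connection is free

    def release(self, conn):
        self._idle.put(conn)


def handle_requests(n, pool=None):
    """Serve n requests and return how many new connections were opened."""
    opened = 0
    for _ in range(n):
        if pool is None:
            fake_connect()  # a fresh connection for every request
            opened += 1
        else:
            conn = pool.acquire()  # reuse an already open connection
            pool.release(conn)
    return opened
```

Without a pool every request pays the connection cost; with one, the cost is paid only when the pool is created.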

Why don’t we use gunicorn?

Stage 2

Setup – how to get a unicorn prancing

If you just enter gunicorn into your requirements.txt file – it won’t work. Heroku is actually compiling all the packages with the virtual environment in a different location and we end up with messed up paths – it will not start.

But if we reinstall it using pip in our run_heroku_run.sh just after . bin/activate:

pip -E . install --upgrade gunicorn

It will run just fine.

Herding them unicorns

Let’s get the unicorns together. If we tweak the -w parameter we can actually make gunicorn work concurrently. This might be a bug, or a feature left for us by Heroku. Either way, remember the limits set in Heroku’s Acceptable Use Policy, which you have to abide by. It limits, for example, RAM usage, so too many workers will cross that boundary. Be gentle. If you need to scale, you’ll still need to buy more nodes; this is a how-to on getting the most out of a single node.
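A rough way to pick a value for -w is to budget memory per worker. The sketch below assumes you have measured how much RAM one worker uses; the function name and all the numbers are hypothetical, not Heroku’s actual limits.

```python
def max_workers(memory_limit_mb, per_worker_mb, reserve_mb=0):
    """Largest worker count whose combined memory stays under the limit."""
    if per_worker_mb <= 0:
        raise ValueError("per_worker_mb must be positive")
    usable = memory_limit_mb - reserve_mb
    return max(usable // per_worker_mb, 0)


# Hypothetical numbers: a 512 MB quota, 60 MB per worker, 50 MB kept spare.
print(max_workers(512, 60, reserve_mb=50))
```

Treat the result as an upper bound: as the measurements further down show, more workers stop helping well before memory runs out if the database is the bottleneck.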

Change the last line in run_heroku_run.sh:

../bin/gunicorn_django -b 0.0.0.0:$PORT -w 3

Altogether it should look like this:

#!/bin/bash
. bin/activate
bin/pip-2.7 -E . install --upgrade gunicorn
cd ATP_Performance_Test
../bin/gunicorn_django -b 0.0.0.0:$PORT -w 3

First of all let’s just say this – 100 workers won’t be 5 times better than 20 workers. When the database is throttling, it’s like 100 shoppers queuing at one till. Though they might motivate the shop assistant to pull his finger out.
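The till analogy can be written down as a toy model: throughput grows with the number of workers until the shared database becomes the cap. This is a simplification with hypothetical numbers; a real overloaded database can get slower rather than stay flat.

```python
def throughput_rps(workers, request_time_ms, db_capacity_rps):
    """Requests per second for `workers` processes sharing one database.

    Each worker finishes at most 1000 / request_time_ms requests per second,
    and the database caps the total at db_capacity_rps.
    """
    worker_limit = workers * 1000 / request_time_ms
    return min(worker_limit, db_capacity_rps)


# Hypothetical numbers: 100 ms per request, database saturates at 60 req/s.
for w in (1, 3, 20, 100):
    print(w, throughput_rps(w, 100, 60))
```

In this model 20 workers and 100 workers give the same throughput, because both are already waiting at the same till.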

Performance introduction

Information from Heroku on HTTP routing:

“The heroku.com stack only supports single threaded requests. Even if your application were to fork and support handling multiple requests at once, the routing mesh will never serve more than a single request to a dyno at a time.”

It does not seem to be so.

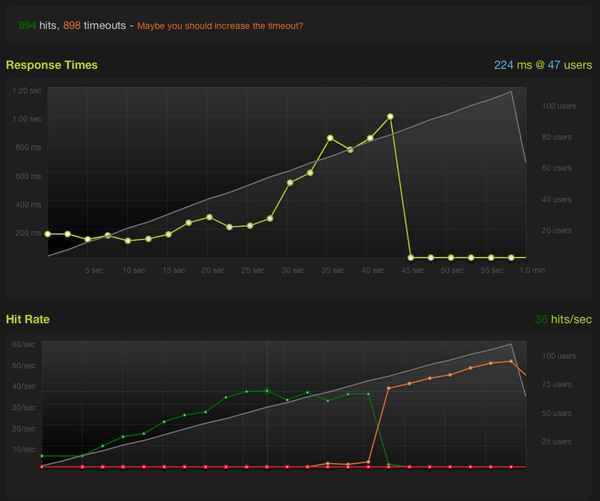

1 gunicorn worker is actually slightly slower than manage.py. 3 workers? Let’s see:

Performance – 3 unicorn workers

Starting easy.

Demo is available here: http://fierce-sunset-334.herokuapp.com/jinja2/

Stage 2 – gunicorn, 3 workers

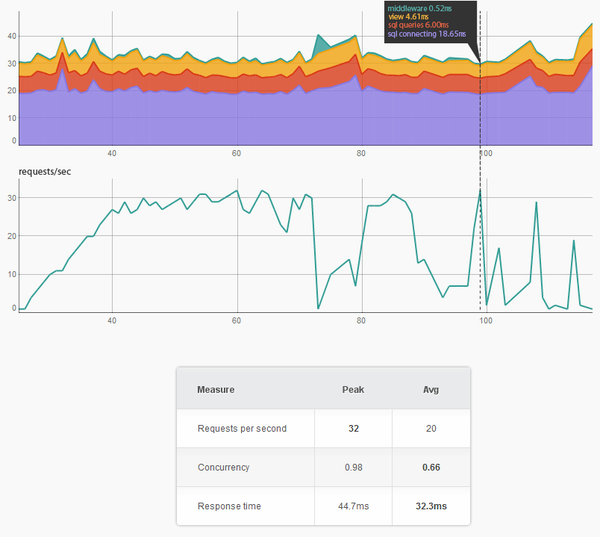

Stage 2 – gunicorn, 3 workers – performance analysis

42 requests per second, that’s 1.5 times better. It might not look like that much of an improvement, but it actually is. For example it’s enough to stop our database test data filler getting errors.

If we look at the performance metrics – we are spending even more time connecting to the DB. This means that the number of connections to the PostgreSQL database on the free plan is throttled. It tells us that we really should try another database with connection pooling right now. But we won’t yet – let’s push the beast to its limits.

Performance – 10 unicorn workers

Demo is available here: http://vivid-summer-485.herokuapp.com/jinja2/

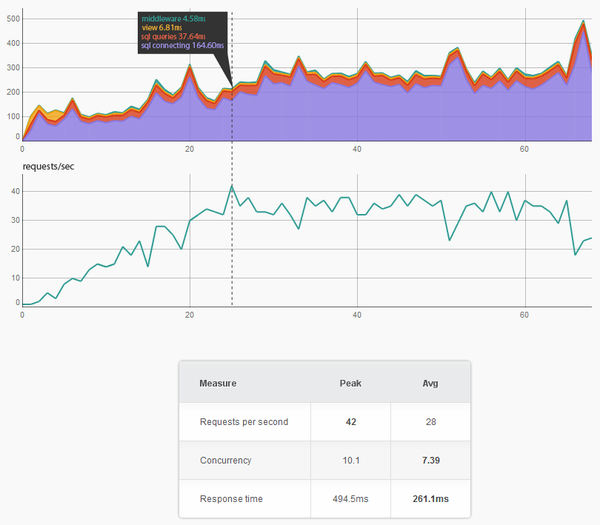

Stage 2 – gunicorn, 10 workers

Stage 2 – gunicorn, 10 workers – performance analysis

So we’ve hit the limit, it’s worse. Quick glance at the metrics shows that we’ve encountered a DB meltdown, it is throttled for sure. We won’t get more out of it.

Stage 3

Let’s do something about it – the simplest solution would be to fire up an EC2 instance, install PostgreSQL on it and try again. As it happens, a micro instance is essentially free lately.

We recommend getting a proper VPS, but let’s do it the easy way. Micro instance, Ubuntu Natty Narwhal Server, PostgreSQL 8.4, connection pooling using pgBouncer – the easy way.

Setup – Heroku prancing with external PostgreSQL database

- Start up a new EC2 micro instance, choose an Ubuntu AMI.

- Modify your EC2 security group:

- allow SSH (TCP port 22) access from your own IP

- allow postgres (TCP port 5432) access from everywhere (0.0.0.0/0)

- allow pgBouncer (TCP port 6432) access from everywhere (0.0.0.0/0)

- SSH as root to the EC2 instance:

- Run:

sudo apt-get install postgresql

- Modify /etc/postgresql/8.4/main/postgresql.conf, insert:

listen_addresses = '*'

- Modify /etc/postgresql/8.4/main/pg_hba.conf, insert (or modify the strings to your own liking):

host atp_performance_test atp_performance_test 0.0.0.0/0 md5

- Run:

sudo su - postgres
psql -c "CREATE DATABASE atp_performance_test"
psql -c "CREATE USER atp_performance_test WITH PASSWORD 'atp_performance_test'"
psql -c "GRANT ALL PRIVILEGES ON DATABASE atp_performance_test TO atp_performance_test"

- Install and set up pgBouncer as described in our previous post: Django and PostgreSQL – improving the performance with no effort and no code.

- Modify your settings.py file, at the bottom add:

import os
os.environ["DATABASE_URL"] = \
"postgres://atp_performance_test:atp_performance_test@\
:6432/atp_performance_test"

- Run:

heroku config:add DATABASE_URL=\
postgres://atp_performance_test:atp_performance_test@\
:6432/atp_performance_test

Much nicer way thanks to .

The last step will prevent Heroku from injecting its own database settings (as we are overriding them). That’s the way to use external databases with Heroku.
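To see what a DATABASE_URL encodes, here is a small sketch that splits one into the fields Django’s DATABASES setting expects. It is not a description of how Heroku reads the variable. The helper name `database_settings` and the host `db.example.com` are placeholders, and the ENGINE string depends on your Django version (older releases use `django.db.backends.postgresql_psycopg2`).

```python
from urllib.parse import urlparse


def database_settings(url):
    """Turn a postgres:// URL into a Django-style DATABASES entry."""
    parts = urlparse(url)
    return {
        "ENGINE": "django.db.backends.postgresql",  # varies by Django version
        "NAME": parts.path.lstrip("/"),
        "USER": parts.username,
        "PASSWORD": parts.password,
        "HOST": parts.hostname,
        "PORT": parts.port,
    }


# db.example.com is a placeholder host, not a value from the post.
example = (
    "postgres://atp_performance_test:atp_performance_test"
    "@db.example.com:6432/atp_performance_test"
)
DATABASES = {"default": database_settings(example)}
print(DATABASES["default"]["HOST"], DATABASES["default"]["PORT"])
```

Port 6432 in the URL means the application talks to pgBouncer rather than to PostgreSQL directly.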

Now the usual:

git push heroku master

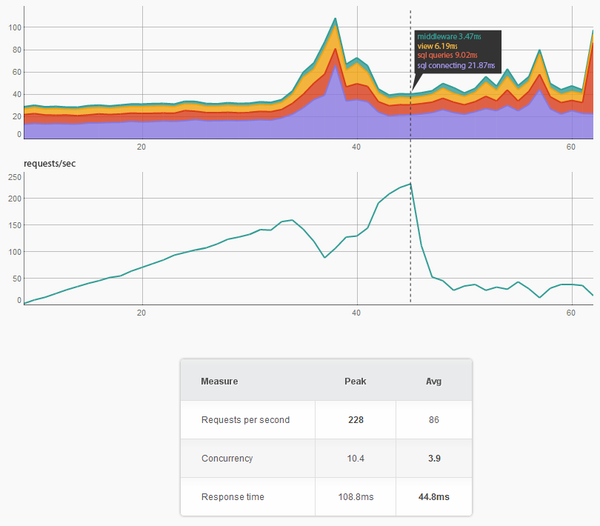

Performance – Django on Heroku with external PostgreSQL DB run on free EC2 micro

Let’s try pushing it harder:

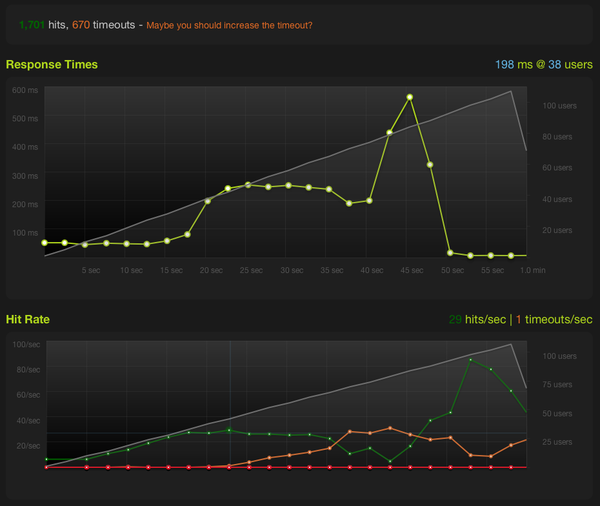

Stage 3 – gunicorn, 10 workers, external database on a free EC2 micro

Stage 3 – gunicorn, 10 workers, external database – performance analysis

Whoa, that’s quite amazing. 200 requests per second with no effort. It should not be slower if we took the worker count down to about 3 or 4, as we’re I/O bound.

It peaked at 228.0 requests/second and the DB started to fail. Database times are not as low as they could be because the EC2 instance is far from the Heroku node.

That’s almost 8x what we started with, all for free (with the micro instance and the free tier)!
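The speedup can be cross-checked from the numbers quoted in the text. The Stage 1 baseline is not stated directly here, so the sketch infers it from "42 requests per second, that’s 1.5 times better"; since 1.5 is a rounded figure, the result is approximate.

```python
stage2_rps = 42.0      # 3 gunicorn workers, as reported
stage2_gain = 1.5      # "1.5 times better" than the development server
sustained_rps = 200.0  # Stage 3, as reported
peak_rps = 228.0       # Stage 3 peak, as reported

baseline_rps = stage2_rps / stage2_gain  # implied Stage 1 throughput
sustained_speedup = sustained_rps / baseline_rps
peak_speedup = peak_rps / baseline_rps

print(f"implied baseline: {baseline_rps:.0f} req/s")
print(f"speedup: {sustained_speedup:.1f}x sustained, {peak_speedup:.1f}x at peak")
```

Under that assumption the sustained and peak figures bracket the 8x in the title.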

Summary

We can deduce that Heroku has one hell of an infrastructure, as the CPU was never the limiting factor for requests. Apparently the routing mesh is not connection throttled; the database is. If the mesh really served only one request at a time, we would need a response time of about 4 ms to do 225 requests per second (simple division).
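The simple division, spelled out as a sketch. The `concurrent_requests` parameter is an addition for illustration: it shows how the required response time relaxes once several requests are in flight at once.

```python
def required_response_ms(target_rps, concurrent_requests=1):
    """Average response time needed to sustain target_rps."""
    return 1000.0 * concurrent_requests / target_rps


print(round(required_response_ms(225), 2))      # one request at a time
print(round(required_response_ms(225, 10), 2))  # ten in flight (hypothetical)
```

With strictly serial requests each response would need to finish in under 5 ms, which a page backed by a remote database is unlikely to manage.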

Consider taking your DB away from Heroku (but be cautious – it has to be close to the Heroku nodes – check it with a simple Apache Benchmark test), as it will give you a massive speedup for a price much lower than Heroku’s $200/mo for the cheapest Ronin dedicated database.
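If you want a quick closeness check without Apache Benchmark, timing a plain TCP connection to the database port gives a rough idea of the network distance. This is a different, simpler technique than an ab run, and the function name and defaults are assumptions made for the sketch.

```python
import socket
import time


def connect_latency_ms(host, port, attempts=5, timeout=2.0):
    """Middle sample of the time in milliseconds to open a TCP connection."""
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # connection opened; close it again immediately
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return samples[len(samples) // 2]
```

Run it from a machine close to your application, for example `connect_latency_ms("db.example.com", 6432)` with your own database host in place of the placeholder. It measures only the TCP handshake, not query time.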

Next week Marcin Mincer will write about deploying Django automagically on multiple servers, with automatic minification, gzipping and deployment of static files to the S3 cloud.


(–) Tomek Kopczuk
